I use an app called Daylio as a diary and a mood tracker. It’s really good! I recommend it.

I also use an app called Habit to track things I want to do every day, like “stay off social media” or “work on a project”.

Recently, Daylio added a new ‘Goals’ feature, which pretty neatly replaces the functionality of Habit, while integrating into Daylio’s rather nice stat tracking stuff. Of course, there’s no “import Habit data” button, but both apps support exporting data in either human-readable or backup formats - how hard could it be to migrate the data over?

Not that hard, it turns out! I had a fun time with it, too. Here’s what I did.

The .daylio file format

Daylio provides two options for exporting your data - either as a backup file (with a .daylio extension) or as a PDF or CSV. It does warn you that the latter two options cannot be re-ingested into the app; that is, we can’t edit a CSV and then import it again. The only real option is to somehow edit the backup.

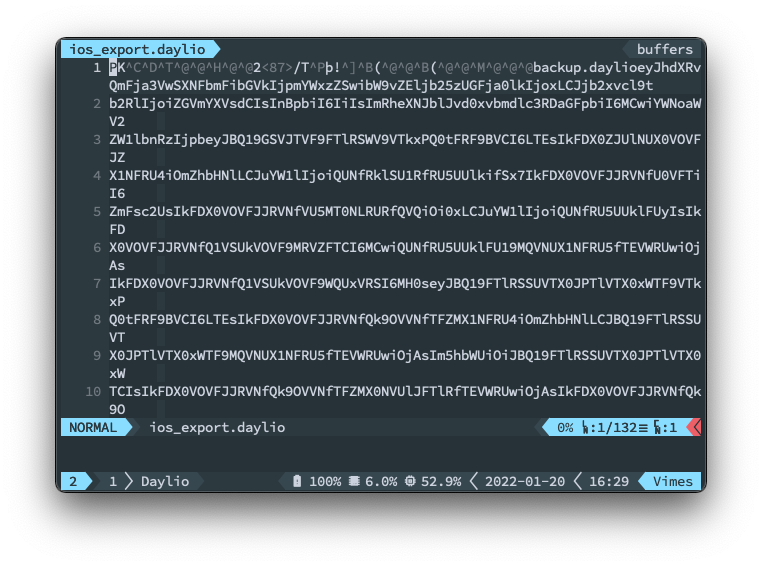

First things first, let’s try and open the file in a text editor and hope against all reason that it’s JSON or something.

Bum. That looks like binary. I really do not want to get into

reverse-engineering binary formats from a hexdump or similar; I’m just not smart

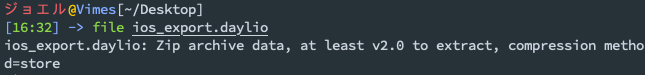

enough. Let’s see what file thinks about it.

It’s a zip file with a silly extension! Fabulous. Let’s extract it and see what the craic is.

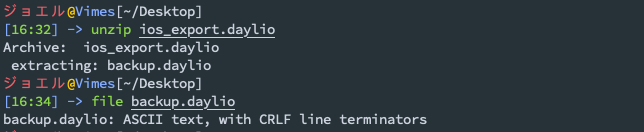
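For the curious, file recognises zip archives by their first few bytes. A minimal Go sketch of the same check might look like this; it assumes a non-empty archive, which begins with the local file header signature "PK\x03\x04", and it is an illustration rather than anything taken from dayligo. In practice, just trying to open the file with Go’s archive/zip package works equally well.

```go
package main

import (
	"bytes"
	"fmt"
	"os"
)

// zipMagic is the local file header signature that starts a non-empty zip archive.
var zipMagic = []byte{'P', 'K', 0x03, 0x04}

// looksLikeZip reports whether data starts with the zip signature.
// Empty archives start with a different signature ("PK\x05\x06"),
// which this quick check deliberately ignores.
func looksLikeZip(data []byte) bool {
	return bytes.HasPrefix(data, zipMagic)
}

func main() {
	if len(os.Args) < 2 {
		fmt.Println("usage: sniff <backup.daylio>")
		return
	}
	data, err := os.ReadFile(os.Args[1])
	if err != nil {
		fmt.Println("read error:", err)
		return
	}
	fmt.Println("zip archive:", looksLikeZip(data))
}
```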

The archive contains another .daylio file, though we can see that thankfully this one isn’t a zip archive. I’m using a blank Daylio export for the purposes of not leaking my “dear diary” entries; if you’ve attached photos to any Daylio entries these appear in an assets/ directory inside the archive.

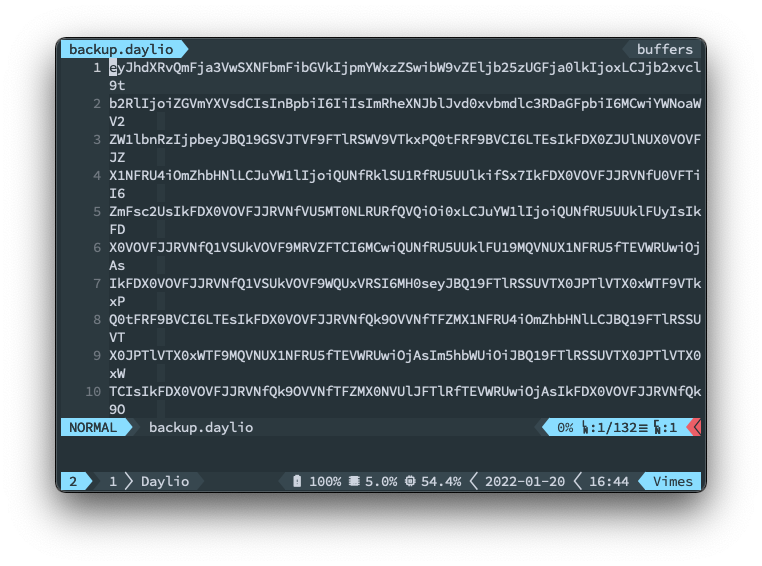

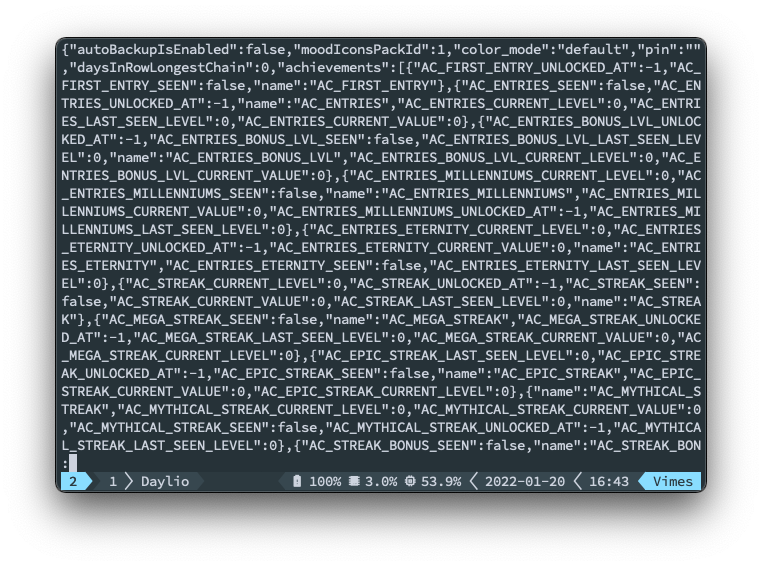

Let’s open up the new .daylio file. I hope it’s JSON or something.

Alas! Foiled again. However, this one is just text rather than Vim attempting to render binary. In fact, it looks an awful lot like Base64. Let’s see what happens if we run it through a decoder.

That looks like JSON. Excellent! We’re in business. I don’t know what it says about me that I can recognise Base64 just by looking at it, but it can’t be anything good.

dayligo

The next step is to write some software to parse the Daylio JSON and allow us to edit it. I ended up going with Golang for this - not for any special reason, I just like it.

The resulting library, including the program to import the Habit entries into Daylio, is on GitHub at JoelOtter/dayligo, a name I am unjustifiably pleased with.

I’m not going to go through the code in great detail, because that would be very boring. Instead, I encourage you to have a look through if you’re interested. Here are a few things I thought were worth noting.

Backup JSON format

Daylio backups use three different root-level objects for describing goals:

- "goals" - the goals themselves. These can be named, or linked to a “tag”. Tags are “things you did on a day”, e.g. “projects” or “exercise”.
- "goalEntries" - these represent a “completed” goal, with an associated goal ID and timestamp.
- "goalSuccessWeeks" - these represent weeks of the year in which a goal was fully completed. There’s a bit of weirdness to this, since what counts as “fully completed” depends on the goal’s aim: it can be every day, as in Habit, or one of a few other options like “three times a week”.

The structure of the file as represented in JSON is parsed using the types in structure.go.

The process of reverse-engineering these files was basically to export one, then adjust the clock on my phone to add a load more goal entries. Crucially, Daylio does not allow you to backdate “goal success weeks”, so while it would have been trivial to naively import the habits as goals, the “streak” would not have been correct. Given it’s the streak that motivates me, this wasn’t acceptable.

The Habit script

The script for importing the Habit files lives at cmd/import-habit. It doesn’t do anything particularly clever with the Habit file, which is a plain ol’ CSV. I did have to cheat a little bit by editing the Habit file so that goal names matched; getting the script to resolve these in a clever way felt like a waste of time.

Golang-specific JSON bits

The dayligo import/export process stores an internal string-to-JSON-message map as well as a reference to a temporary directory. This is because I didn’t want to define types for every bit of the JSON file, but wanted to be able to marshal my updated backup back to JSON without losing any of that data. The “Golang way” to do that is to parse the JSON as map[string]json.RawMessage. The full type definition of a Daylio backup is as follows.

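```go
import "encoding/json"

// Backup mirrors the decoded JSON of a .daylio backup. DayEntry, Goal,
// GoalEntry, GoalSuccessWeek and Tag are dayligo's own types (see structure.go).
type Backup struct {
	DayEntries       []DayEntry        `json:"dayEntries"`
	Goals            []Goal            `json:"goals"`
	GoalEntries      []GoalEntry       `json:"goalEntries"`
	GoalSuccessWeeks []GoalSuccessWeek `json:"goalSuccessWeeks"`
	Tags             []Tag             `json:"tags"`
	Version          int64             `json:"version"`

	// rawMap keeps every top-level key of the backup, including ones with no
	// typed field above, so nothing is lost when marshalling back to JSON.
	rawMap map[string]json.RawMessage
	// tempDirPath references the temporary directory used during import/export.
	tempDirPath string
}
```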
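The “typed fields plus raw map” pattern can be shown with a cut-down, hypothetical type: decode the same JSON twice, once into the struct and once into the map, then write the typed values back over their raw entries before marshalling the map. This is a sketch of the general technique, not dayligo’s implementation.

```go
package main

import (
	"encoding/json"
	"fmt"
)

// backup is a hypothetical cut-down stand-in for Backup: only "version" is
// typed, and every top-level key is also kept verbatim in rawMap.
type backup struct {
	Version int64 `json:"version"`
	rawMap  map[string]json.RawMessage // unexported, so json ignores it
}

// parse fills both the typed field and the raw map from the same bytes.
func parse(data []byte) (*backup, error) {
	b := &backup{}
	if err := json.Unmarshal(data, b); err != nil {
		return nil, err
	}
	if err := json.Unmarshal(data, &b.rawMap); err != nil {
		return nil, err
	}
	if b.rawMap == nil { // input was JSON null
		b.rawMap = make(map[string]json.RawMessage)
	}
	return b, nil
}

// marshal writes the typed value over its raw entry, so keys that were never
// modelled are emitted unchanged.
func (b *backup) marshal() ([]byte, error) {
	v, err := json.Marshal(b.Version)
	if err != nil {
		return nil, err
	}
	b.rawMap["version"] = v
	return json.Marshal(b.rawMap)
}

func main() {
	b, err := parse([]byte(`{"version":1,"untyped":{"a":[1,2]}}`))
	if err != nil {
		fmt.Println(err)
		return
	}
	b.Version = 2
	out, err := b.marshal()
	if err != nil {
		fmt.Println(err)
		return
	}
	fmt.Println(string(out)) // {"untyped":{"a":[1,2]},"version":2}
}
```

Because json.Marshal sorts map keys, the re-marshalled file can list keys in a different order from the original, which is harmless to any conforming JSON parser.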

The functions for importing and exporting these .daylio files (the full archive ones) live in file.go.

ID ordering

There’s some assorted oddness with how the IDs get ordered in the Daylio entries; in some cases they appear to be assigned newest-first. I hope this is just an artifact of the export process and that they’re stored differently internally; re-assigning every ID each time a new entry is added would be a crime against God. I sought to emulate this ordering where possible, but I suspect it doesn’t actually make a difference to the import.

ISO week

Goal success weeks have a “week” number assigned to them. I found this quite confusing at first, but I believe it’s actually the ISO week number, a concept I’d not heard of before; thankfully Golang provides functionality to get this and the year the week “belongs” to. Go’s time handling stuff remains quite impressive.

I think that’s all the interesting bits. Interesting to me, at any rate. Have a nice day.